| RWTH-BOSTON-50 Database |

Our sign language database for isolated sign language recognition is freely available for download (295 MB, FTP server).

You can also browse the sign language database, especially you should have a look at the readme file.If you want to publish results achieved on this database, you should cite the following work:

-

M. Zahedi, D. Keysers, T. Deselaers, and H. Ney. Combination of Tangent Distance and an Image Distortion Model for Appearance-Based Sign Language Recognition. In Deutsche Arbeitsgemeinschaft für Mustererkennung Symposium (DAGM), Lecture Notes in Computer Science, volume 3663, pages 401-408, Vienna, Austria, August 2005.

The National Center for sign language and Gesture Resources of the Boston University published a database of ASL sentences. Although this database has not been produced primarily for image processing research, it consists of 201 annotated video streams of ASL sentences.

The signing is captured simultaneously by four standard stationary cameras where three of them are black/white and one is a color camera. Two black/white cameras, placed towards the signer's face, form a stereo pair and another camera is installed on the side of the signer. The color camera is placed between the stereo camera pair and is zoomed to capture only the face of the signer. The movies published on the internet are at 30 frames per second and the size of the frames is 312*242 pixels. We use the published video streams at the same frame rate but we use only the upper center part of size 195*165 pixels because parts of the bottom of the frames show some information about the frame and the left and right border of the frames are unused.

To create the RWTH-BOSTON-50 database for ASL word recognition, we extracted 483 utterances of 50 words from this database. These utterances are segmented manually. The RWTH-BOSTON-50 database consists of 50 sign language words that are listed with the number of occurrences here:

IX_i (37), BUY (31), WHO (25), GIVE (24), WHAT (24), BOOK (23), FUTURE (21), CAN (19), CAR (19), GO (19), VISIT (18), LOVE (16), ARRIVE (15), HOUSE (12), IX_i far (12), POSS (12), SOMETHING/ONE (12), YESTERDAY (12), SHOULD (10), IX-1p (8), WOMAN (8), BOX (7), FINISH (7), NEW (7), NOT (7), HAVE (6), LIKE (6), BLAME (6), BREAK-DOWN (5), PREFER (5), READ (4),COAT (3), CORN (3), LEAVE (3), MAN (3), PEOPLE (3),THINK (3), VEGETABLE (3) VIDEOTAPE (3), BROTHER (2), CANDY (2), FRIEND (2), GROUP (2), HOMEWORK (2), KNOW (2),LEG (2), MOVIE (2), STUDENT (2), TOY (2), WRITE (2)

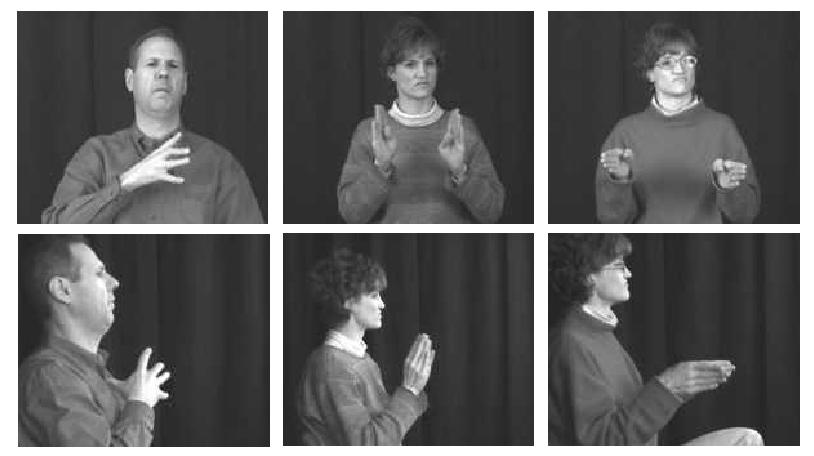

In the RWTH-BOSTON-50 database, there are three signers: one male and two female signers. All of the signers are dressed differently and the brightness of their clothes is different. We use the frames captured by two of the four cameras, one camera of the stereo camera pair in front of the signer and the other lateral. Using both of the stereo cameras and the color camera may be useful in stereo and facial expression recognition, respectively. Both of the used cameras are in fixed positions and capture the videos in a controlled environment simultaneously.

Some of the words significantly differ in their visual appearance, i.e. are pronounced in a different way. Therefore we manually labeled the pronounced words, e.g. BOOK was splitted into four classes book_, book1, book2, and book3. In total there are 83 pronunciations.

The signers and the views of the cameras are shown here:

|

The task is on this database is a leaving-one-out recognition over all sequences, where confusions between pronunciations of the same class are not counted as an error, i.e. book2 is confused with book1, no error is counted.

We trained a system with all sentences, where the leaving-one-out is realized such that each sentence has its own recognition lexicon where the sentence itself and its pronunciation are removed from the lexicon. (see partition-lexicons)

Furthermore we annotated the hand positions in every first frame in each sentence. (see handmarks, but not used in any publication so far)

Philippe Dreuw Last modified: Wed Jul 18 15:36:03 CEST 2007 Disclaimer. Created Wed Dec 22 18:04:32 CET 2004